If you’re in the know, you might’ve heard of the AGI leaker Jimmy Apples recently. After making several correct leaks in the past and taking the AI community by storm by announcing AGI, he disappeared from Twitter. In this video, I’ll describe what happened, how credible this person is, and whether OpenAI or DeepMind is the one in possession of AGI.

The Jimmy Apples leak document: https://docs.google.com/document/d/1K–sU97pa54xFfKggTABU9Kh…gN3Rk/edit.

–

TIMESTAMPS:

00:00 News from Jimmy Apples
00:30 The AGI Leak Recap
01:58 Why this AGI leak is real
04:15 Why this leak is scary
06:13 What speaks against the leak

–


Sam Altman Is the Oppenheimer of Our Age

This article was featured in One Great Story, New York’s reading recommendation newsletter.

This past spring, Sam Altman, the 38-year-old CEO of OpenAI, sat down with Silicon Valley’s favorite Buddhist monk, Jack Kornfield. This was at Wisdom 2.0, a low-stakes event at San Francisco’s Yerba Buena Center for the Arts, a forum dedicated to merging wisdom and “the great technologies of our age.” The two men occupied huge white upholstered chairs on a dark mandala-backed stage. Even the moderator seemed confused by Altman’s presence.

“What brought you here?” he asked.

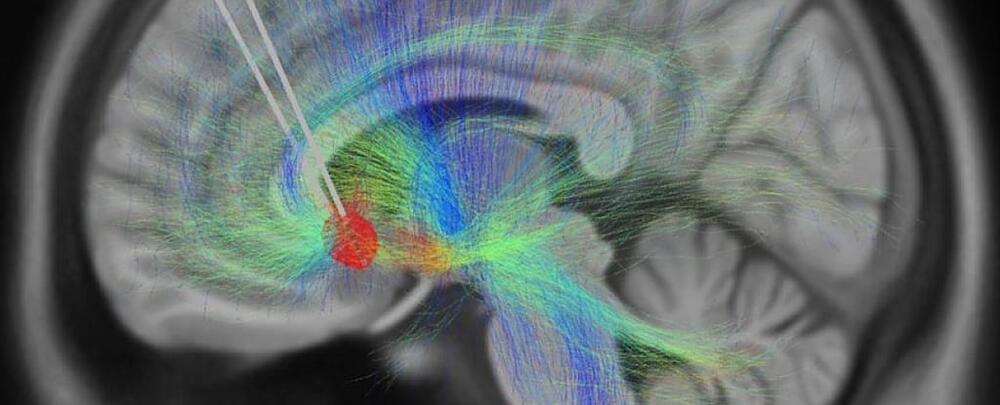

AI Identifies Brain Signals Associated With Recovering From Depression

It could soon be possible to measure changes in depression levels like we can measure blood pressure or heart rate.

In a new study, 10 patients with treatment-resistant depression were enrolled in a six-month course of deep brain stimulation (DBS) therapy. Previous results from DBS have been mixed, but help from artificial intelligence could soon change that.

Success with DBS relies on stimulating the right tissue, which requires accurate feedback. Currently, that feedback comes from patients reporting their mood, which can be shaped by stressful life events as much as by neurological wiring.

AI Can Predict Future Heart Attacks By Analyzing CT Scans

An artificial intelligence platform developed by an Israeli startup can reveal whether a patient is at risk of a heart attack by analyzing their routine chest CT scans.

Results from a new study testing Nanox.AI’s HealthCCSng algorithm on such scans found that 58 percent of patients unknowingly had moderate to severe levels of coronary artery calcium (CAC), or plaque.

CAC is the strongest predictor of future cardiac events, and measuring it typically subjects patients to an additional costly scan that is not normally covered by insurance companies.

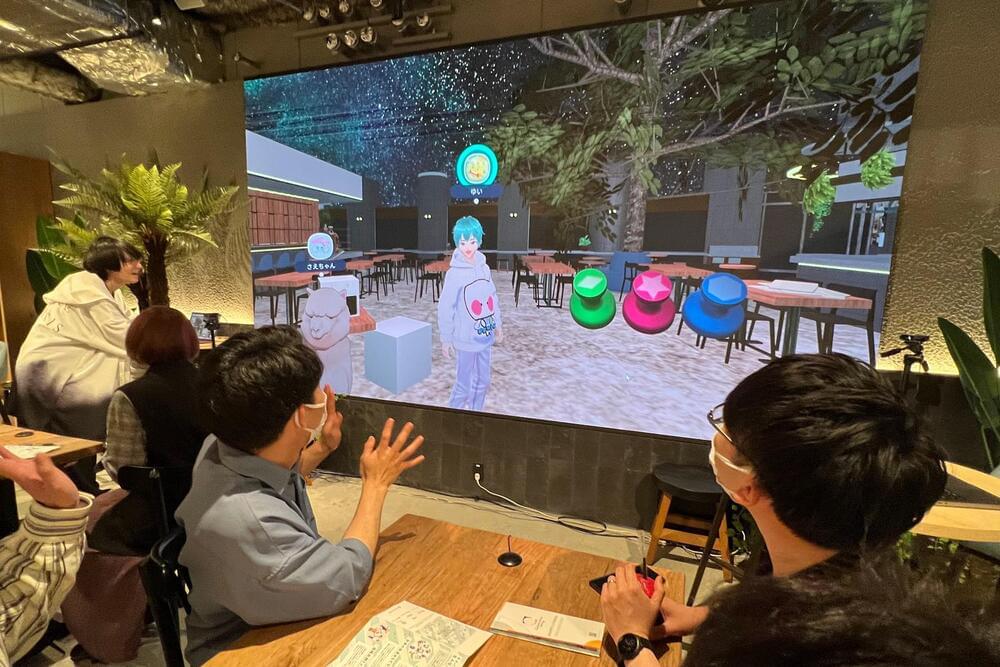

Changing reality with virtual technologies

From attending a meeting to enjoying a live performance or, perhaps, taking a class at the University of Tokyo’s Metaverse School of Engineering, the application of virtual reality is expanding in our daily lives. Earlier this year, virtual reality technologies garnered attention as tech giants, including Meta and Apple, unveiled new VR/AR (virtual reality/augmented reality) headsets. We spoke with VR and AR specialist Takuji Narumi, an associate professor at the Graduate School of Information Science and Technology, to learn about his latest research and what VR’s future has to offer.

At the Avatar Robot Café DAWN ver. β, employees serve customers via a digital screen and engage in conversation using avatars of their choice, such as an alpaca and a man with blue hair.

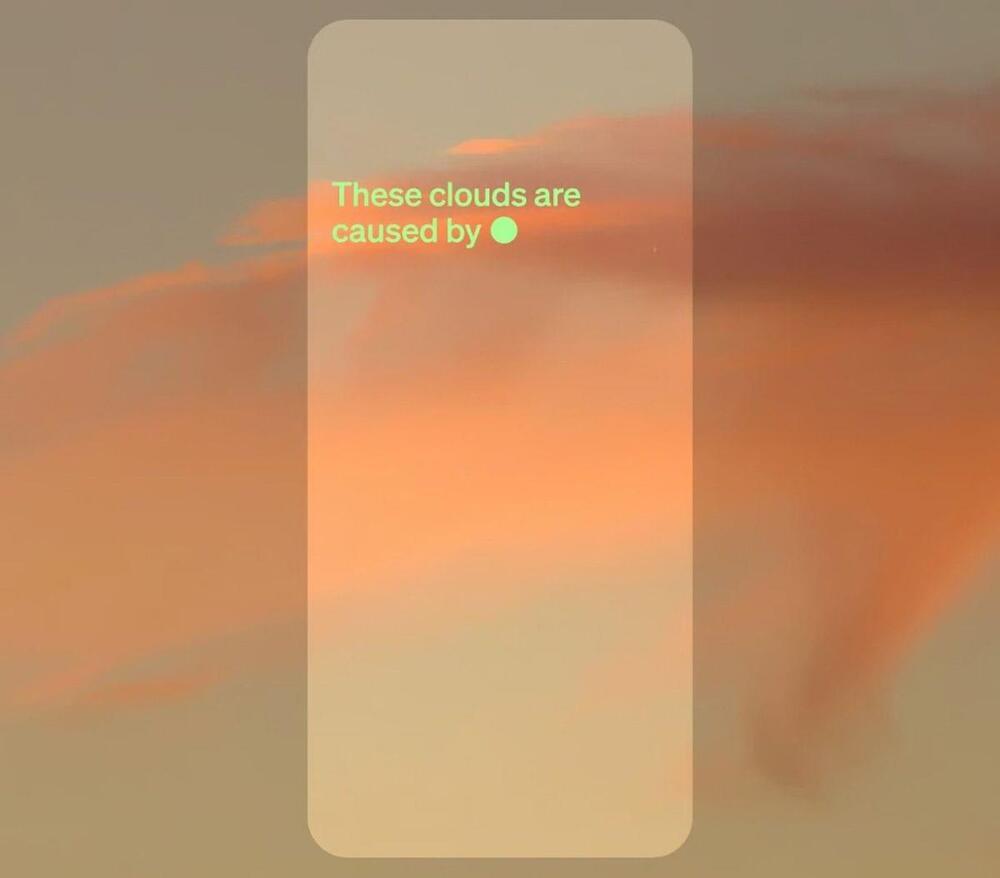

ChatGPT’s New Upgrade Teases AI’s Multimodal Future

ChatGPT isn’t just a chatbot anymore.

OpenAI’s latest upgrade grants ChatGPT powerful new abilities that go beyond text. It can tell bedtime stories in its own AI voice, identify objects in photos, and respond to audio recordings. These capabilities represent the next big thing in AI: multimodal models.

“Multimodal is the next generation of these large models, where it can process not just text, but also images, audio, video, and even other modalities,” says Dr. Linxi “Jim” Fan, Senior AI Research Scientist at Nvidia.

OpenAI’s chatbot learns to carry a conversation—and expect competition.

Micro Robot Disregards Gears, Embraces Explosions

Researchers at Cornell University have developed a tiny proof-of-concept robot that moves its four limbs by rapidly igniting a mixture of methane and oxygen inside flexible joints.

The device can’t do much more than blow each limb outward with varying amounts of force, but that’s enough to steer and move the little unit. It has enough power to make some very impressive jumps. Its ability to navigate with such limited actuators is reminiscent of hopped-up bristlebots.

Electronically controlled combustion in the joints allows for up to 100 explosions per second, which generates enough force to do useful work. The prototype is only 29 millimeters long and weighs only 1.6 grams, but it can jump up to 56 centimeters and move at almost 17 centimeters per second.
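To put those figures in perspective, they can be expressed relative to the robot’s own size. The snippet below is a back-of-the-envelope sketch that uses only the numbers quoted above. Normalizing by body length is our own framing for illustration, not a metric reported in the excerpt, and the helper name `in_body_lengths` is hypothetical.

```python
# Figures quoted for the Cornell combustion-powered prototype.
BODY_LENGTH_MM = 29.0
JUMP_CM = 56.0
SPEED_CM_PER_S = 17.0  # quoted as "almost" 17 cm/s, so treat it as an upper bound


def in_body_lengths(distance_cm: float, body_mm: float = BODY_LENGTH_MM) -> float:
    """Express a distance given in centimeters as a multiple of a body length given in millimeters.

    Hypothetical helper for illustration only: normalizing by body length is an
    assumption made here, not a figure taken from the original report.
    """
    return (distance_cm * 10.0) / body_mm  # convert cm to mm, then divide by body length


jump_ratio = in_body_lengths(JUMP_CM)
speed_ratio = in_body_lengths(SPEED_CM_PER_S)

print(f"Jump: {jump_ratio:.1f} body lengths")
print(f"Top speed: {speed_ratio:.1f} body lengths per second")
```

By this rough measure, the 56-centimeter jump works out to about 19 body lengths, and the top speed to roughly 6 body lengths per second.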

How to Use ChatGPT’s New Image Features

OpenAI recently announced an upgrade to ChatGPT that adds two features: AI voice options to hear the chatbot responding to your prompts, and image analysis capabilities. The image function is similar to what’s already available for free with Google’s Bard chatbot.

Even after hours of testing the limits and capabilities of ChatGPT, OpenAI’s chatbot still manages to surprise and scare me at the same time. Yes, I was quite impressed with the web browsing beta offered through ChatGPT Plus, but I remained anxious about the tool’s ramifications for people who write for money online, among many other concerns. The new image feature arriving for OpenAI’s subscribers left me with similarly mixed feelings.

While I haven’t yet had the opportunity to experiment with the new audio capabilities (other great reporters on staff have), I was able to test the soon-to-arrive image features. Here’s how to use the new image search coming to ChatGPT, along with some tips to help you get started.